A/B Test Reports

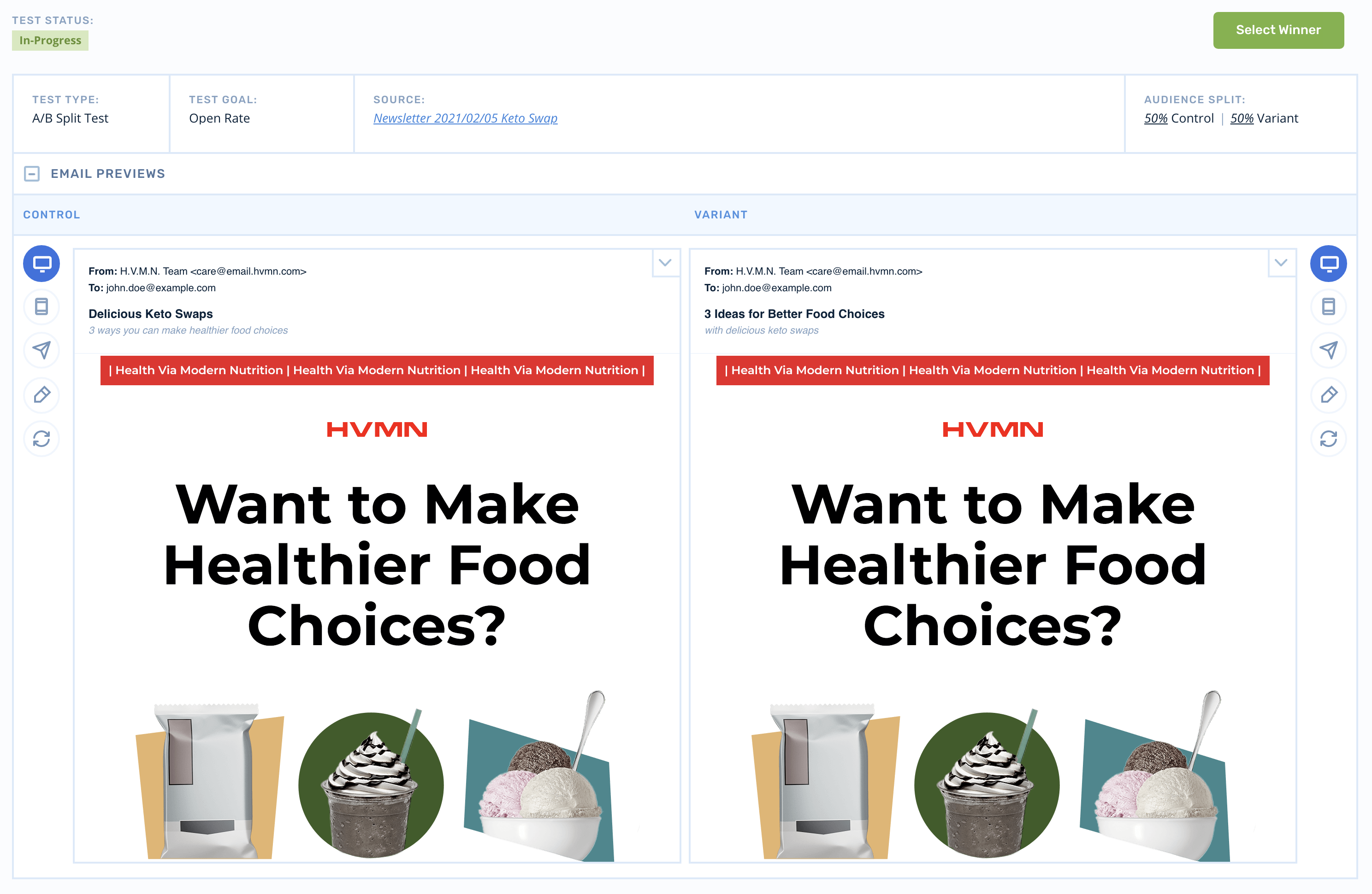

A/B testing lets you send different emails or campaigns to different groups of people and compare which one performs better. Once a winner is decided, that email or campaign is used for all future customers who pass through the node.

We have four different types of tests: split, holdout, branch, and broadcast. Each one generally follows the same principles and records the same data.

A/B Test Reporting Basics

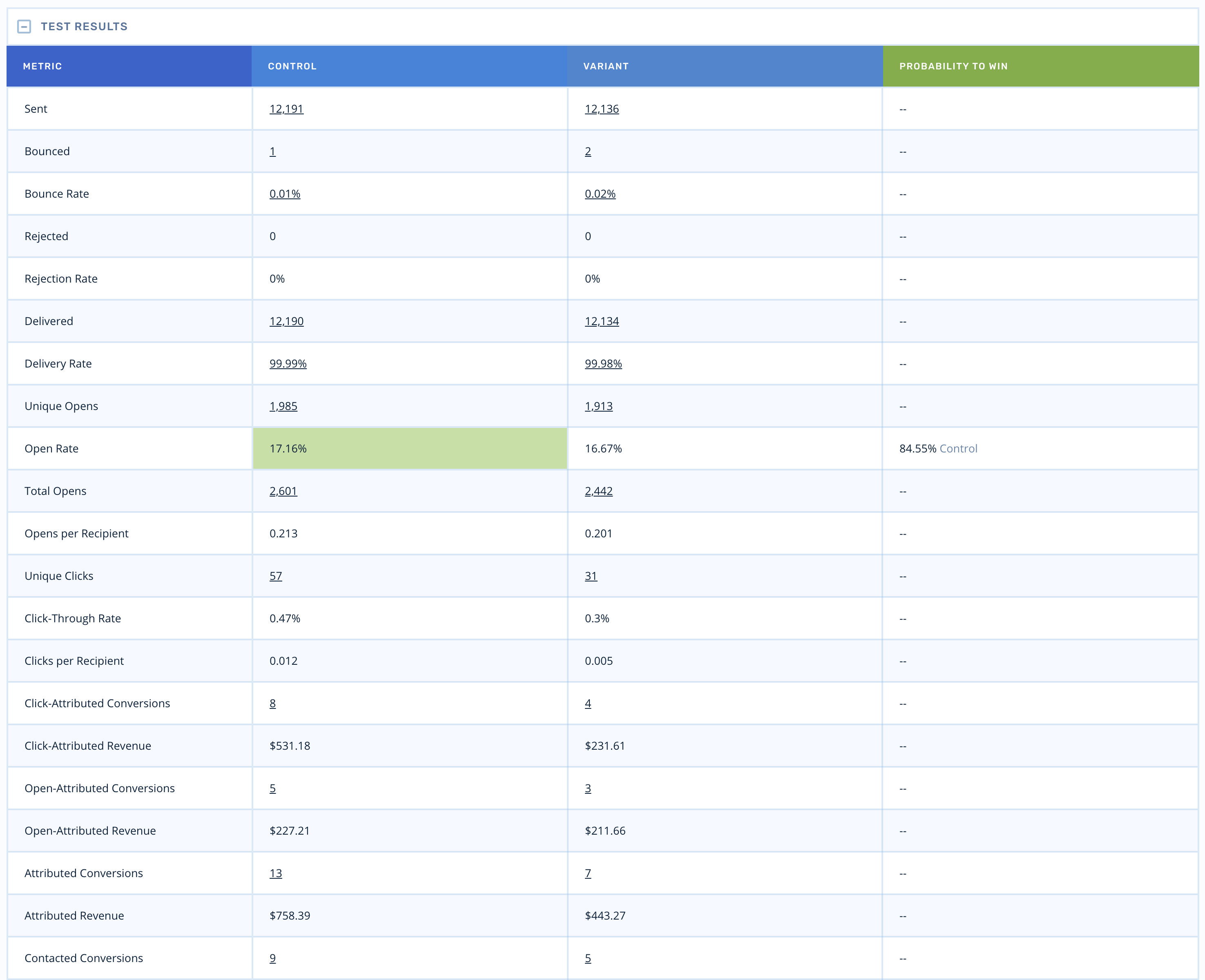

At the most basic level, an A/B test has two groups of customers. For each group, we measure the total number of trials and the number of successes. These counts are used to calculate which group has performed better and, ultimately, to decide that one group is the winner. We also record a complete set of engagement metrics about the emails sent for each group.
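As a rough illustration of the data involved, the per-group counts could be modeled as below. This is a minimal sketch, not the product's actual data model: the `GroupResult` class, its field names, and the sample numbers are all hypothetical, and what counts as a "trial" or a "success" depends on how the test is configured.

```python
from dataclasses import dataclass


@dataclass
class GroupResult:
    """Hypothetical container for one group's A/B test counts."""
    name: str
    trials: int      # total number of trials recorded for the group
    successes: int   # number of those trials that counted as a success

    @property
    def success_rate(self) -> float:
        # Observed success rate; 0.0 when no trials have been recorded yet.
        return self.successes / self.trials if self.trials else 0.0


# Made-up numbers, for illustration only.
control = GroupResult("control", trials=1000, successes=120)
variant = GroupResult("variant", trials=1000, successes=150)

for group in (control, variant):
    print(f"{group.name}: {group.success_rate:.1%} of {group.trials} trials")
```

The observed rates alone do not say how confident the comparison is. That is what the probability calculation in the next section addresses.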

Calculating Probability

Probability to Win

Probability Control Beats Variant

The probability that the control group beats the variant group is calculated using the closed-form Bayesian formula derived by Evan Miller. For more information, see his site: https://www.evanmiller.org/bayesian-ab-testing.html
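For readers who want to see the calculation, here is a minimal Python sketch of the closed-form formula published on Evan Miller's page. It assumes a uniform Beta(1, 1) prior for each group, so that alpha = successes + 1 and beta = failures + 1. The prior actually used by the product is not stated here, and the function names are illustrative only.

```python
from math import exp, lgamma, log


def log_beta(a: float, b: float) -> float:
    """Natural log of the Beta function B(a, b)."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)


def prob_b_beats_a(alpha_a: int, beta_a: int, alpha_b: int, beta_b: int) -> float:
    """Pr(p_B > p_A) for p_A ~ Beta(alpha_a, beta_a), p_B ~ Beta(alpha_b, beta_b).

    Closed-form sum from Evan Miller's article; alpha_b must be a positive integer.
    Terms are computed in log space to avoid overflow with large counts.
    """
    total = 0.0
    for i in range(alpha_b):
        total += exp(
            log_beta(alpha_a + i, beta_a + beta_b)
            - log(beta_b + i)
            - log_beta(1 + i, beta_b)
            - log_beta(alpha_a, beta_a)
        )
    return total


def prob_control_beats_variant(
    control_successes: int,
    control_trials: int,
    variant_successes: int,
    variant_trials: int,
) -> float:
    """Probability that the control's true success rate exceeds the variant's.

    Assumes a uniform Beta(1, 1) prior on each group (an assumption of this sketch).
    """
    alpha_c = control_successes + 1
    beta_c = control_trials - control_successes + 1
    alpha_v = variant_successes + 1
    beta_v = variant_trials - variant_successes + 1
    # The control plays the role of "B" in the formula, the variant of "A".
    return prob_b_beats_a(alpha_v, beta_v, alpha_c, beta_c)


# Made-up counts: control 120/1000 successes, variant 150/1000 successes.
print(prob_control_beats_variant(120, 1000, 150, 1000))
```

The probability that the variant beats the control is one minus this value, since ties have probability zero under continuous distributions.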

Decision Formula

The decision to end a test is based on the above formula and takes into account the total number of trials. This methodology was derived by Chris Stucchio and is defined here: https://www.chrisstucchio.com/blog/2014/bayesian_ab_decision_rule.html

The gist of the decision formula is that we calculate how much we would expect to lose if the group we pick as the winner is actually the wrong choice. When this expected loss falls below a certain threshold, we can be confident that we are picking the winner or, at the very least, that our losses are small if we accidentally pick the worse group.
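The idea can be sketched in Python as follows. Note that this sketch estimates the expected loss by Monte Carlo sampling rather than with the closed-form expression from Chris Stucchio's article, so it approximates the same quantity and does not reproduce the production calculation. The uniform Beta(1, 1) prior, the function names, the sample count, and the threshold value of 0.001 are all assumptions chosen for illustration.

```python
import random


def expected_loss(chosen, other, samples=50_000, seed=0):
    """Monte Carlo estimate of E[max(p_other - p_chosen, 0)].

    `chosen` and `other` are (successes, trials) tuples. The result is the
    average success rate we would give up by picking `chosen` in the cases
    where `other` is actually better. Assumes a uniform Beta(1, 1) prior.
    """
    rng = random.Random(seed)  # fixed seed so repeated runs give the same estimate
    chosen_s, chosen_n = chosen
    other_s, other_n = other
    total = 0.0
    for _ in range(samples):
        p_chosen = rng.betavariate(chosen_s + 1, chosen_n - chosen_s + 1)
        p_other = rng.betavariate(other_s + 1, other_n - other_s + 1)
        total += max(p_other - p_chosen, 0.0)
    return total / samples


def decide(control, variant, threshold=0.001):
    """Return "control" or "variant" once the smaller expected loss is below
    the threshold, or None if the test should keep running.

    The threshold is a hypothetical value, not the product's setting.
    """
    losses = {
        "control": expected_loss(control, variant),
        "variant": expected_loss(variant, control),
    }
    winner = min(losses, key=losses.get)
    return winner if losses[winner] < threshold else None


# Made-up counts: (successes, trials) for each group.
print(decide(control=(200, 1000), variant=(100, 1000)))
print(decide(control=(1, 10), variant=(1, 10)))
```

With only a handful of trials the posterior distributions are wide, so the expected loss of either choice stays above the threshold and the test continues. As trials accumulate, the loss of picking the better group shrinks toward zero, which is how the total number of trials enters the decision.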
